Data Center Cooling Strategies: Air, Liquid, and Direct-to-Chip Systems Explained

AI, HPC, and dense cloud workloads are quietly rewriting the rules of data center cooling. Power densities that used to plateau at 5–10 kW per rack are now routinely 30–80 kW, and AI clusters can push 80–120 kW per rack or more.

At the same time, the global average PUE has been stuck in the 1.55–1.60 band for years, meaning cooling and other overheads still consume a large share of site energy. Cooling alone can represent 20–50% of a data center’s total electricity use, making it one of the biggest levers for both cost and sustainability.

In this context, how you cool, and how you model and coordinate those systems, is now a strategic design decision, not an afterthought.

1. Air-Based Cooling: CRAC/CRAH, In-Row, and Rear-Door Systems

Traditional CRAC / CRAH + room-level air

The classic model is familiar:

- CRAC (Computer Room Air Conditioner) units use DX refrigerant systems

- CRAH (Computer Room Air Handler) units use chilled-water coils fed from a central plant

- Air is supplied via raised floor or overhead, organized into hot/cold aisles, with return air back to the units

With good airflow management and containment, traditional air systems can handle typical densities of 5–15 kW per rack, sometimes stretching toward 20–30 kW in well-optimized environments.

ASHRAE TC 9.9 thermal guidelines have gradually widened allowable inlet temperature ranges to enable more economization (using outside air or higher chilled-water temperatures), improving overall efficiency without compromising IT reliability when done correctly.
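As a rough illustration of how inlet readings might be checked against such envelopes, here is a minimal sketch. The temperature limits are an assumption based on commonly cited ASHRAE figures (recommended 18–27 °C, with wider allowable ranges for classes A1 and A2) and should be verified against the current edition of the guidelines; the rack names and readings are hypothetical.

```python
# Envelope limits in degrees C. These values are an assumption taken from
# commonly cited ASHRAE TC 9.9 figures; verify against the current edition.
RECOMMENDED_C = (18.0, 27.0)
ALLOWABLE_C = {"A1": (15.0, 32.0), "A2": (10.0, 35.0)}


def classify_inlet(temp_c: float, equipment_class: str = "A1") -> str:
    """Return 'recommended', 'allowable', or 'out_of_range' for one reading."""
    rec_lo, rec_hi = RECOMMENDED_C
    allow_lo, allow_hi = ALLOWABLE_C[equipment_class]
    if rec_lo <= temp_c <= rec_hi:
        return "recommended"
    if allow_lo <= temp_c <= allow_hi:
        return "allowable"
    return "out_of_range"


# Hypothetical inlet readings from three rack positions.
readings = {"rack-01": 22.5, "rack-02": 29.0, "rack-03": 36.0}
report = {rack: classify_inlet(temp) for rack, temp in readings.items()}
print(report)
```

Running inside the allowable band but outside the recommended one is where most of the economization gain sits, which is why the distinction is worth tracking per rack rather than per room.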

In-row and overhead cooling

To push air cooling further, many facilities deploy in-row cooling units:

- Small DX or chilled-water units placed between racks in the aisle

- Shorter air paths from coil to IT inlet, reducing mixing and fan power

- Typically used in high-density pods (e.g., 20–40 kW per rack)

Similar ideas show up as overhead coolers or “thermal walls,” bringing coils closer to the heat source and tightening control of air paths.

Rear-door heat exchangers (RDHx)

Active rear-door heat exchangers mount directly on the back of racks:

- Hot exhaust air passes through a chilled-water coil integrated into the door

- Heat is removed at the rack, and near-neutral air is discharged into the room

- This significantly reduces room-level thermal burden

RDHx can enable 30–50 kW per rack (and beyond in some designs) using air at the IT interface but water a few centimeters away, often as a stepping stone toward fuller liquid cooling.

2. Liquid Cooling: From Room Loops to Direct-to-Chip and Immersion

Air’s fundamental limitation is its low heat capacity. Per unit volume, water can absorb roughly 3,500 times more heat than air for the same temperature rise, and engineered coolants also far outperform air, which is why liquid cooling is moving from niche to mainstream in high-density environments.
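To make the gap concrete, the sketch below compares the volumetric flow of air and water needed to remove the same rack heat load, using the basic relation Q = ρ · V · cp · ΔT. The fluid properties are approximate textbook values near room temperature; the 50 kW rack and the 10 K temperature rise are assumptions chosen purely for illustration.

```python
# Approximate fluid properties near room temperature (textbook values).
AIR = {"density": 1.2, "cp": 1005.0}      # kg/m^3, J/(kg*K)
WATER = {"density": 998.0, "cp": 4186.0}  # kg/m^3, J/(kg*K)


def volumetric_flow_m3_s(heat_w: float, delta_t_k: float, fluid: dict) -> float:
    """Flow needed to carry heat_w at a given temperature rise: Q = rho*V*cp*dT."""
    return heat_w / (fluid["density"] * fluid["cp"] * delta_t_k)


rack_heat_w = 50_000.0  # hypothetical 50 kW rack
delta_t_k = 10.0        # assumed temperature rise for both fluids

air_flow = volumetric_flow_m3_s(rack_heat_w, delta_t_k, AIR)
water_flow = volumetric_flow_m3_s(rack_heat_w, delta_t_k, WATER)

print(f"Air:   {air_flow:.2f} m^3/s")
print(f"Water: {water_flow * 60_000:.1f} L/min")
print(f"Volume ratio (air / water): {air_flow / water_flow:.0f}")
```

Under these assumptions the rack needs on the order of 4 m³/s of air but only about 72 L/min of water, which is the practical reason pipes can replace very large air paths at high density.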

Liquid-assisted air: loops feeding in-row & RDHx

The first step toward liquid is often at rack or row level:

- Chilled water loops feed in-row coolers or RDHx

- Air still cools the IT directly; liquid removes heat from coils near the rack

- The room becomes more of a “buffer” environment, easing constraints on layout

This hybrid model can bridge traditional air-cooled halls and pockets of high-density racks, without requiring full-scale liquid-to-chip adoption on day one.

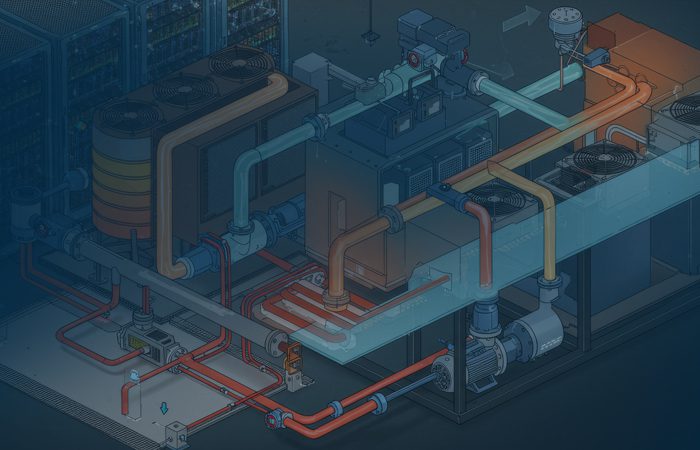

Direct-to-chip (D2C) / cold-plate cooling

Direct liquid cooling (DLC) or direct-to-chip uses cold plates mounted on CPUs, GPUs and other high-power components:

- Coolant flows through cold plates in direct contact with the chip package

- A rack or row manifold distributes and collects coolant, which is circulated by a CDU (coolant distribution unit)

- CDUs connect to facility water, often via liquid–liquid heat exchangers

DLC is now regarded as a preferred method for extreme chip power densities and racks in the 30–80 kW+ range, especially for AI and HPC workloads.

Key design considerations include:

- Coolant chemistry and wetted materials (to avoid corrosion and contamination)

- Redundancy and failover paths in the coolant network

- Leak detection, drip trays, and structured routing to protect other equipment
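The redundancy consideration can be sketched as a simple sizing calculation. The example below counts how many CDUs a row would need under N, N+1, and 2N schemes; the function name, the 250 kW CDU rating, and the row of ten 60 kW racks are hypothetical values for illustration, not vendor data.

```python
import math


def cdu_count(load_kw: float, cdu_capacity_kw: float, scheme: str = "N+1") -> int:
    """Number of CDUs to install for a given heat load and redundancy scheme."""
    n = math.ceil(load_kw / cdu_capacity_kw)  # units needed with no spare
    if scheme == "N":
        return n
    if scheme == "N+1":
        return n + 1
    if scheme == "2N":
        return 2 * n
    raise ValueError(f"unknown redundancy scheme: {scheme}")


# Hypothetical row: ten 60 kW racks served by CDUs rated at 250 kW each.
row_load_kw = 10 * 60
for scheme in ("N", "N+1", "2N"):
    print(scheme, cdu_count(row_load_kw, 250, scheme))
```

A real design would also account for part-load behavior, pump curves, and which racks share a manifold, so treat this as a first-pass count only.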

Immersion cooling

Immersion cooling goes a step further:

- Servers are submerged in a dielectric fluid (single-phase or two-phase)

- Heat from components is absorbed by the fluid and transferred via heat exchangers

- Rack power densities of 100 kW are common, with some two-phase systems exceeding 150 kW per rack

Immersion dramatically reduces or eliminates server-level fans and can enable very high density in a compact footprint. However, it has significant implications for:

- Service workflows (how you swap components in/out of tanks)

- Hardware choices (immersion-ready hardware & materials)

- Integration with existing facility cooling and fire protection philosophies

The emerging pattern in many hyperscale / AI builds is hybrid: air-cooled for general-purpose compute, liquid-assisted for moderate density, and DLC / immersion for AI training clusters.
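The density ranges quoted in this article can be collected into a small lookup, shown here as a sketch rather than a design rule. The band boundaries come from the figures above, except the lower bound for room-level air and the open-ended upper bounds for DLC and immersion, which are assumptions; real limits depend on the specific hardware and site.

```python
import math

# (label, low kW, high kW) per rack, based on the ranges quoted in this article.
# Assumptions: room-level air starts at 0 kW; DLC and immersion are open-ended.
# The bands overlap on purpose, because several options can suit one density.
COOLING_BANDS = [
    ("Room-level air (CRAC/CRAH)", 0, 15),
    ("In-row cooling", 20, 40),
    ("Rear-door heat exchanger", 30, 50),
    ("Direct-to-chip (DLC)", 30, math.inf),
    ("Immersion", 100, math.inf),
]


def candidate_cooling(rack_kw: float) -> list:
    """Return every cooling approach whose quoted band covers rack_kw."""
    return [label for label, low, high in COOLING_BANDS if low <= rack_kw <= high]


for kw in (10, 35, 120):
    print(f"{kw} kW/rack -> {candidate_cooling(kw)}")
```

A density that falls between bands (for example 17 kW) returns an empty list here, which mirrors the article's point that well-optimized air can stretch beyond its typical range only with extra engineering effort.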

3. Efficiency, PUE, and the Cost of Cooling

Despite technological progress, Uptime Institute’s surveys show that average global PUE has remained stubbornly flat around 1.55–1.58 for much of the last decade.

At the same time, the IEA and other bodies project that data center electricity consumption could reach 800–1,050 TWh per year by 2026, a ~75% increase over 2022. Within many individual facilities, cooling is responsible for roughly 20–50% of total electricity use.
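Because PUE is defined as total facility energy divided by IT energy, the overhead share of site energy follows directly as 1 − 1/PUE. The short sketch below works through that arithmetic; the function names and the 30% / 7% split for the example site are hypothetical.

```python
def overhead_share(pue: float) -> float:
    """Fraction of total facility energy not delivered to IT: 1 - 1/PUE."""
    return 1.0 - 1.0 / pue


def pue_from_shares(cooling_share: float, other_overhead_share: float) -> float:
    """PUE when cooling and other overheads are fractions of total energy."""
    it_share = 1.0 - cooling_share - other_overhead_share
    return 1.0 / it_share


for pue in (1.55, 1.58):
    print(f"PUE {pue}: {overhead_share(pue):.1%} of site energy is overhead")

# Hypothetical site: cooling 30% and other overheads 7% of total energy.
print(f"PUE = {pue_from_shares(0.30, 0.07):.2f}")
```

One consequence of this arithmetic: a site where cooling alone takes 50% of total electricity cannot have a PUE below 2.0, so the upper end of the 20–50% range describes facilities that are less efficient than the survey average.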

Liquid and hybrid cooling approaches can improve efficiency in several ways:

- Higher coolant temperatures → more free cooling opportunities and better chiller efficiency

- Reduced fan power → fans in IT and CRACs/CRAHs can be down-sized or eliminated

- Targeted cooling → less overcooling of entire rooms for a few hot racks
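The fan-power point is worth a quick calculation. Under the standard fan affinity laws, fan power scales roughly with the cube of fan speed (and therefore of airflow), so modest airflow reductions yield large savings. The sketch below assumes ideal affinity-law behavior, which real fans and systems only approximate.

```python
def fan_power_fraction(speed_fraction: float) -> float:
    """Fan affinity law: power scales with the cube of speed (and airflow)."""
    return speed_fraction ** 3


for speed in (1.0, 0.9, 0.8, 0.7):
    power = fan_power_fraction(speed)
    print(f"{speed:.0%} airflow -> {power:.0%} power ({1.0 - power:.0%} saved)")
```

Moving part of the heat load to liquid lets the remaining fans run slower, which is where this cubic relationship pays off.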

Studies and vendor deployments report double-digit reductions in cooling energy and meaningful PUE improvements when well-designed liquid cooling is adopted, particularly for AI clusters. One study cites emissions reductions of up to 21% from shifting to liquid cooling in certain setups.

The message: moving up the cooling “maturity curve” is not just about thermal headroom; it’s a major energy and carbon lever.

4. Designing for High-Density Racks (30–80 kW+)

Once rack densities consistently cross 30 kW and approach 80 kW+, you’re in a different design regime.

Key implications:

- Topology choices

  - Air at the IT interface, with advanced containment and RDHx, may work up to roughly 30–50 kW per rack (the RDHx range noted earlier)

  - Beyond that, you’re likely in hybrid (air + RDHx / in-row) or full DLC / immersion territory

- Hydraulic and thermal modeling

  - Temperature set-points and ΔT targets along the liquid path (chip → cold plate → manifold → CDU → facility water)

  - Pressure drop, pump selection, and redundancy in the loop

- Mechanical–electrical–controls coordination

  - Power distribution must be aligned with rack density, redundancy (N, N+1, 2N), and cooling capabilities

  - Controls logic for fans, pumps, valves, CDUs, chillers and economizers must be consistent and thoroughly tested

- Safety and risk management

  - Leak detection (sensors, drip trays, isolation valves) integrated into the DCIM/BMS

  - Materials compatibility with coolants, and fail-safe behavior under loss-of-coolant scenarios
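The hydraulic and thermal modeling items above interact: a tighter ΔT target means more flow, and more flow means more pump power for a given pressure drop. The sketch below shows that trade-off for a single rack, assuming water as the coolant. The 80 kW load, 150 kPa loop pressure drop, and 60% overall pump efficiency are hypothetical inputs, and pressure drop is held constant for simplicity even though in a real loop it rises with flow.

```python
WATER_DENSITY = 998.0  # kg/m^3, approximate
WATER_CP = 4186.0      # J/(kg*K), approximate


def flow_for_load(heat_kw: float, delta_t_k: float) -> float:
    """Volumetric flow (m^3/s) needed to carry heat_kw at a given loop delta-T."""
    return heat_kw * 1000.0 / (WATER_DENSITY * WATER_CP * delta_t_k)


def pump_power_kw(flow_m3_s: float, pressure_drop_kpa: float, efficiency: float) -> float:
    """Pump input power: flow * pressure drop / efficiency (m^3/s * kPa = kW)."""
    return flow_m3_s * pressure_drop_kpa / efficiency


rack_kw = 80.0          # hypothetical rack load
pressure_drop = 150.0   # kPa, assumed and held constant across cases
efficiency = 0.6        # assumed overall pump efficiency

for delta_t in (5.0, 10.0, 15.0):
    flow = flow_for_load(rack_kw, delta_t)
    power = pump_power_kw(flow, pressure_drop, efficiency)
    print(f"dT {delta_t:>4.1f} K: {flow * 60_000:6.1f} L/min, pump {power:.2f} kW")
```

With a 10 K ΔT this works out to roughly 115 L/min and about half a kilowatt of pump power per rack under the stated assumptions; a proper model would replace the fixed pressure drop with a system curve.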

ASHRAE TC 9.9 has steadily expanded its guidance to cover high-density air-cooled classes (e.g., H1) and liquid-cooled equipment, setting expectations for acceptable inlet conditions and integration patterns.

Design teams now need to think beyond “putting in the right units.” They need multi-domain models that reflect how air, liquid, power, and controls interact at pod, room, and building levels.

5. TAAL Tech POV: BIM + MEP Partner for Complex Cooling Topologies

As cooling architectures become more complex, the quality of the BIM and MEP model becomes just as critical as the choice of chiller or CDU – especially for hyperscale, colocation, and AI-focused clients (think Quark, Amazon, Ethos-type organizations).

TAAL Tech positions itself as a data center BIM + MEP engineering partner focused on making these cooling strategies buildable, coordinated, and OEM-ready.

Concretely, that means:

- High-fidelity cooling topology modeling

  - Detailed Revit / BIM models capturing coolant loops, manifolds, CDUs, RDHx, in-row coolers, overhead coils

  - Accurate pipe routing with support systems, slopes (where needed), valve locations, and redundancy paths

  - Representing containment zones (hot/cold aisles, pods, immersion tanks) as first-class BIM objects

- Mechanical–electrical–controls integration

  - Coordinated MEP models linking cooling plant, pump rooms, white space piping, and electrical distribution

  - Controls architectures modeled to align with OEM standards (e.g., Schneider, Vertiv) and ASHRAE TC 9.9 guidance

  - Embedded data tags for flow, temperature, and redundancy attributes to support downstream commissioning & DCIM integration

- Simulation-ready geometry and data

  - Creating CFD-ready geometry and attributes for partners or in-house teams to run thermal simulations at rack / pod level

  - Using simulation outcomes to refine containment strategies, set-points, and aisle-level airflow/liquid flow distribution

- Standards and library development

  - Developing reusable families and detail libraries for CRAC/CRAH units, RDHx, pipe fittings, quick-connects, leak-detection components, etc.

  - Standardizing naming, parameters, and metadata so that models are consistent across regions and projects
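To illustrate what embedded data tags and standardized naming could look like once exported from a model, here is a minimal sketch of a tag record with basic validation. Everything in it is hypothetical: the `PipeSegmentTag` class, its fields, and the `SITE-SYSTEM-LOOP-SEQ` naming pattern are invented for this example and do not represent TAAL Tech's actual schema or any Revit API.

```python
from __future__ import annotations

import re
from dataclasses import dataclass

# Hypothetical naming pattern: SITE-SYSTEM-LOOP-SEQ, e.g. "DC01-CHW-A-0012".
TAG_PATTERN = re.compile(r"^[A-Z]{2}\d{2}-(CHW|TCS|CW)-[AB]-\d{4}$")
REDUNDANCY_LEVELS = {"N", "N+1", "2N"}


@dataclass
class PipeSegmentTag:
    """Illustrative metadata attached to one pipe segment in the model."""
    tag: str
    design_flow_lpm: float
    supply_temp_c: float
    redundancy: str

    def problems(self) -> list[str]:
        """Return human-readable issues; an empty list means the tag is valid."""
        issues = []
        if not TAG_PATTERN.match(self.tag):
            issues.append(f"{self.tag}: name does not match convention")
        if self.design_flow_lpm <= 0:
            issues.append(f"{self.tag}: design flow must be positive")
        if self.redundancy not in REDUNDANCY_LEVELS:
            issues.append(f"{self.tag}: unknown redundancy '{self.redundancy}'")
        return issues


good = PipeSegmentTag("DC01-CHW-A-0012", 115.0, 18.0, "N+1")
bad = PipeSegmentTag("chw_loop_12", 0.0, 18.0, "N+2")
print(good.problems())
print(bad.problems())
```

Checks like these are cheap to run on every model export, and they catch naming drift between regions before it reaches commissioning or DCIM import.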

For design firms, hyperscalers, and operators, this allows TAAL Tech to act as a scalable execution arm: once the cooling playbook is defined, TAAL Tech can replicate and adapt it across multiple sites, regions, and phases – from concept to IFC and as-built.

Closing: Cooling as a Strategic Design Lever

As AI and high-density compute reshape data center loads, cooling is no longer a “facility support system” in the background. It is:

- A capacity limiter for how much compute you can deploy in a given footprint

- A top-three contributor to energy and carbon performance

- A complex integration problem spanning mechanical, electrical, controls, OEM hardware, and operational workflows

Air-based CRAC/CRAH systems, in-row units, RDHx, DLC cold plates, and immersion tanks are all tools in the same toolbox. The real differentiator is how intelligently you combine them – and how precisely you model and coordinate that combination.

For organizations, partnering with a BIM + MEP specialist like TAAL Tech means being able to:

- Confidently design for 30–80 kW+ racks and AI clusters

- Quantify PUE and energy impacts early in the design process

- Deliver fully coordinated mechanical–electrical–controls models that slot cleanly into OEM ecosystems and ASHRAE-aligned best practices

In short: the future of data center cooling is hybrid, liquid-heavy, and model-driven. TAAL Tech’s role is to help you design and document it with the precision, coordination, and scalability that high-density data centers now demand.